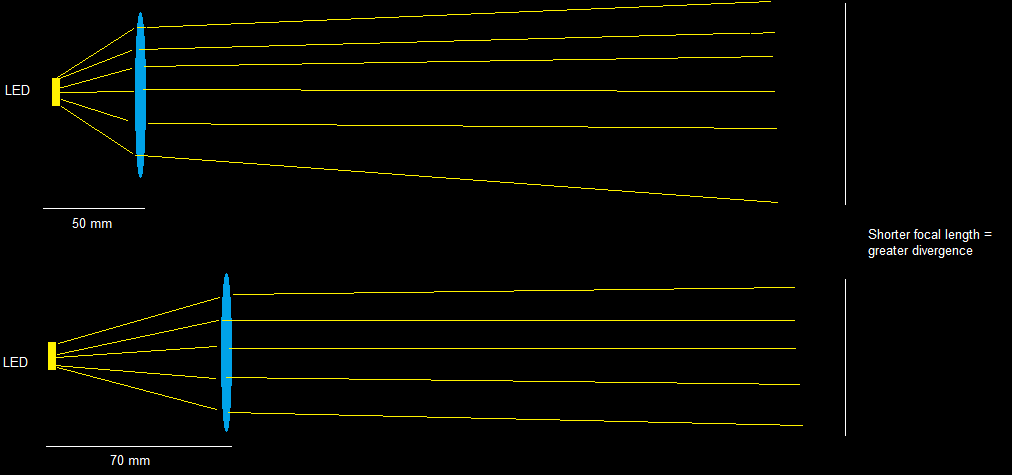

I have two converging Fresnel lenses, one with a 50 mm focal length and the other with a 70 mm focal length.

My experiment looks like this:

When I try to focus an SMD LED emitter into a narrow beam (placing the LED at each lens's focal length), the lens with the shorter focal length gives the larger divergence (measured by the spot size on the wall).

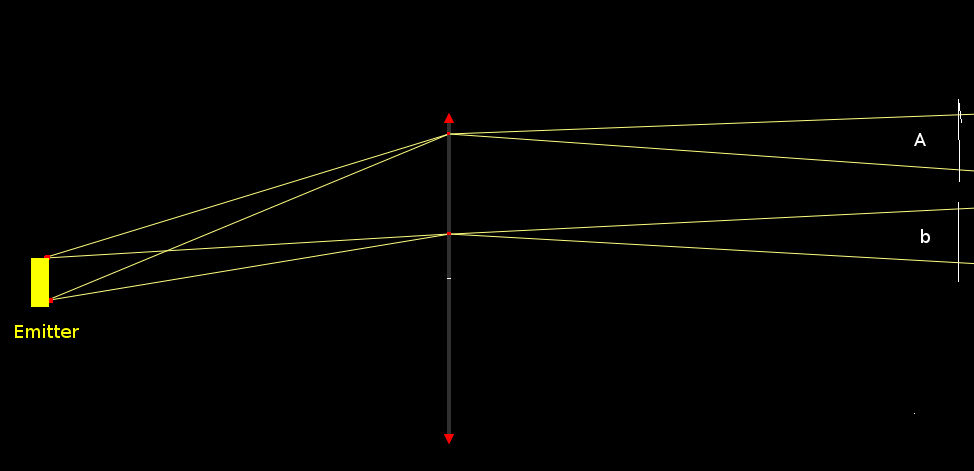

I assume this has something to do with the smaller angle of incidence, but running a simulation with an "ideal" lens shows no difference in divergence between the two cases with different angles of incidence.

(In figure 2, the distance between A and b is equal.)

Also, I am not sure whether the lenses are aspherical (does that even apply to Fresnel lenses?). If they are not, could that be the issue?

Best Answer

When the LED is placed $50\,\mathrm{mm}$ from the lens, its etendue (the product of the LED's emitting area and the solid angle subtended by the lens, i.e., the solid angle of the light the lens collects) is greater than the etendue of the same LED placed $70\,\mathrm{mm}$ from a lens of, presumably, the same size.

Therefore, due to the conservation of etendue, the divergence (solid angle) of the beam after the lens will be greater in the first scenario, i.e., for the $50\,\mathrm{mm}$ lens.
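To put rough numbers on this, here is a minimal Python sketch. The LED width (1 mm) and lens diameter (50 mm) are assumed values for illustration only, since neither is given in the question. It uses the simple "area times solid angle" definition of etendue from above, and the standard geometric estimate that a source of width $s$ at the focus of a lens with focal length $f$ gives a collimated beam with half-angle $\arctan\!\big(\tfrac{s/2}{f}\big)$.

```python
import math


def collected_etendue(source_area_mm2, lens_diameter_mm, distance_mm):
    """Source area times the solid angle subtended by the lens (mm^2 * sr).

    Uses the simple 'area x solid angle' definition, with the lens seen
    as a circular cone from an on-axis point at the given distance.
    """
    half_angle = math.atan((lens_diameter_mm / 2) / distance_mm)
    solid_angle = 2 * math.pi * (1 - math.cos(half_angle))
    return source_area_mm2 * solid_angle


def beam_half_angle_deg(source_width_mm, focal_length_mm):
    """Geometric divergence half-angle of the beam for a source at the focus."""
    return math.degrees(math.atan((source_width_mm / 2) / focal_length_mm))


if __name__ == "__main__":
    led_width_mm = 1.0        # assumed LED emitter width (not from the question)
    lens_diameter_mm = 50.0   # assumed, same for both lenses (not from the question)

    for focal_length_mm in (50.0, 70.0):
        etendue = collected_etendue(led_width_mm ** 2, lens_diameter_mm, focal_length_mm)
        half_angle = beam_half_angle_deg(led_width_mm, focal_length_mm)
        print(f"f = {focal_length_mm:.0f} mm: "
              f"etendue ~ {etendue:.3f} mm^2 sr, "
              f"beam half-angle ~ {half_angle:.3f} deg")
```

Whatever values are assumed, the trend is the same: with the LED size and lens diameter fixed, the shorter focal length gives both the larger collected etendue and the larger beam divergence, which matches the bigger spot seen on the wall.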