I tried asking this in electronics as a question related to oscillators, but I wasn't able to get a satisfactory answer. I think the more math-y types here may shed some additional light on the phenomenon I am observing.

I am using two clocks (measured-clock and reference-clock) to measure the time of one relative to the other. I plot the accumulated difference between the clocks (in microseconds) vs. the total time elapsed on the reference-clock (in seconds). After collecting an hour's worth of data, I can perform a linear regression to determine the difference in frequency between the clocks.

The linear regression has $r^2 = 0.9999$, so it's about as perfect as you can get. The slope of the line is, for example, $-36.3$. This implies that for every second of reference-clock time, the measured-clock falls 36.3 microseconds behind.

I then tune the measured-clock so that it has an extra 36.3 microseconds per second. I then re-run my analysis, expecting a very flat slope and poor $r^2$. Instead, I get another $r^2 = 0.9999$, with a slope of about $-1.2$. If I adjust for an additional 1.2 us/s (bringing the total to 37.5 us/s), I then get the satisfactory "flat slope and poor $r^2$".

It appears as if the first linear regression was wrong, despite the near-perfect $r^2$. Iteratively recalculating the regression for as long as $r^2$ stays very high does arrive at the correct answer, so it seems there is some systematic error that I am unaware of. What am I doing wrong?

EDIT:

My initial clock frequency estimate is 14,318,180 Hz (the HPET timer). From iterative regressions, I can determine that the real clock frequency should be 14,318,712 Hz. This is an additional 532 ticks per second, which implies that the actual error is $-37.156$ us/s.
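To make the arithmetic above easy to check, here is a minimal sketch of the tick-to-rate conversion. The function and constant names are my own for illustration; only the two frequencies come from the figures quoted above. It computes the magnitude only; the negative sign in the text follows the convention that the measured clock falls behind.

```python
# Frequencies quoted in the question (Hz).
NOMINAL_HZ = 14_318_180   # initial HPET estimate
TUNED_HZ = 14_318_712     # value reached by iterating the regression


def ticks_to_us_per_s(extra_ticks: float, nominal_hz: float) -> float:
    """Convert a tick-rate correction into microseconds of drift per second.

    Hypothetical helper: extra_ticks is the number of additional ticks per
    second relative to nominal_hz.
    """
    return extra_ticks / nominal_hz * 1e6


extra_ticks = TUNED_HZ - NOMINAL_HZ                    # 532 ticks per second
rate_us_per_s = ticks_to_us_per_s(extra_ticks, NOMINAL_HZ)

print(extra_ticks, round(rate_us_per_s, 3))            # magnitude, about 37.156 us/s
```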

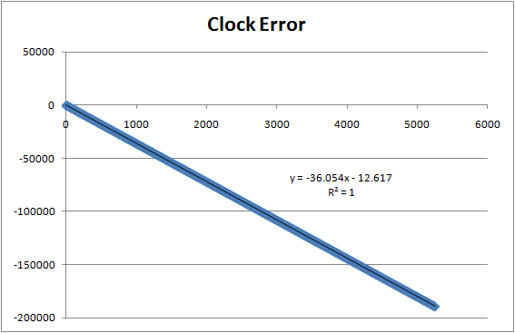

Here is the initial regression. The X axis is the elapsed time of the reference clock, in seconds. The Y axis is the difference between the elapsed time on the measured clock and the elapsed time on the reference clock, in microseconds (i.e. the error between the two clocks as a function of the elapsed time on the reference clock). The reference clock is precise but not accurate. That's okay, because I want the measured clock to match the reference clock, inaccuracy included.

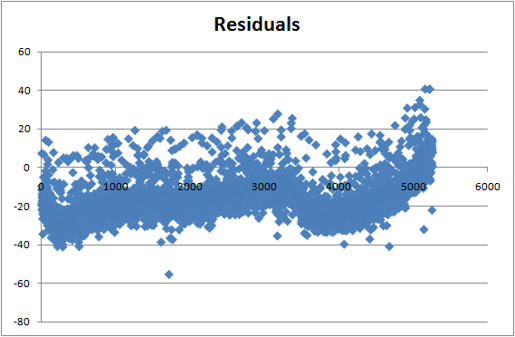

Here is a plot of the residuals for that regression.

The initial regression suggests an error of $-36.054$ us/s. This is an additional 516 ticks per second, suggesting that my HPET is really 14,318,696 Hz. As mentioned above, however, this value is not correct.

Best Answer

You are violating the independence assumption of linear regression. Your observations are correlated with each other. You cannot use standard linear regression.
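To illustrate the kind of correlation meant here, the sketch below simulates an accumulated clock difference and fits an ordinary least-squares line to it. Everything in it is an assumption for illustration: the drift rate, the per-second noise level, and the idea that the noise enters through the per-second increments are made up, not taken from the data in the question. Under those assumptions $r^2$ is close to 1 while the residuals are almost perfectly correlated from one sample to the next, so a high $r^2$ by itself says little about how trustworthy the slope is.

```python
import random


def ols(x, y):
    """Ordinary least squares for y = slope * x + intercept, plus r^2."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (slope * xi + intercept)) ** 2 for xi, yi in zip(x, y))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return slope, intercept, 1.0 - ss_res / ss_tot


def lag1_autocorr(values):
    """Lag-1 autocorrelation; near 0 for independent errors."""
    n = len(values)
    mean = sum(values) / n
    num = sum((values[i] - mean) * (values[i - 1] - mean) for i in range(1, n))
    den = sum((v - mean) ** 2 for v in values)
    return num / den


rng = random.Random(0)        # fixed seed so the run is repeatable
TRUE_RATE = -37.0             # hypothetical drift, us per second
NOISE_SD = 2.0                # hypothetical per-second jitter, us

# One hour of samples; each point is the running sum of noisy increments,
# so every observation carries all the noise of the ones before it.
t = list(range(1, 3601))
accumulated = []
total = 0.0
for _ in t:
    total += TRUE_RATE + rng.gauss(0.0, NOISE_SD)
    accumulated.append(total)

slope, intercept, r2 = ols(t, accumulated)
residuals = [a - (slope * ti + intercept) for ti, a in zip(t, accumulated)]
rho = lag1_autocorr(residuals)

# Differencing recovers the per-second increments, which are independent
# in this simulation; their mean is another estimate of the rate.
increments = [accumulated[0]] + [
    accumulated[i] - accumulated[i - 1] for i in range(1, len(accumulated))
]
mean_rate = sum(increments) / len(increments)

print(f"slope={slope:.3f}  r2={r2:.6f}  residual lag-1 autocorr={rho:.3f}")
print(f"mean of per-second increments={mean_rate:.3f}")
```

Checking the lag-1 autocorrelation of the residuals from the real data is a quick way to see whether this applies. If it is far from zero, working with the differenced series, or with a regression method that models correlated errors, may be a better fit than plain least squares on the accumulated values.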